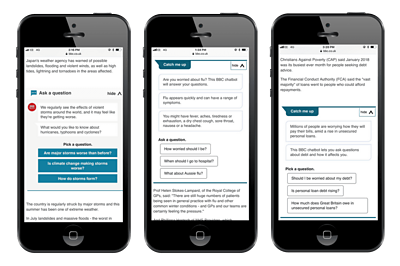

Over the past year, the BBC has piloted a series of in-article chatbots, which are designed to give less avid news-readers background information on major stories. Currently, the text for these bots is scripted by journalists. My task this summer was to see whether we could automate the question-answering process based on information already contained within a news article.

Question answering (QA) is sometimes called an AI-complete problem. That is, solving it in full would be as hard as solving the central problems of artificial intelligence itself. When you dig into it, there are many complex steps that you can easily overlook as a human question-answerer. In this article I will explain some of the issues that make question answering so difficult, particularly when it comes to questions about current events.

How worried should I be?

Question-answering chatbots have been released in other domains, so what makes the news any different? Several companies, such as the digital banking start-ups Monzo and Revolut, have released successful in-app chatbots which handle user queries and try to point users to useful answers. However, compared with news, these bots cover very narrow domains. User queries are about specific topics relating to that service, and the companies already have their own human-written FAQs or customer service logs containing ready-made questions and answers.

By contrast, the questions that can be asked about the news are vastly more open-ended, and there is no ready store of questions or answers. For example, in January of this year, the BBC published an article about the winter flu outbreak featuring an in-article chatbot with several questions and answers written by a journalist. One of these questions in particular caught my eye:

How worried should I be?

The first answer that comes to my mind is, "Well, that depends...". And the journalist's answer captures exactly that:

Most people get better in a week and just need a bit of bed rest, some paracetamol or ibuprofen and plenty of fluids. But if you're very old, very young or have pre-existing health conditions such as heart disease — flu can be deadly.

Let's try to understand this question from the perspective of a fully automated bot. Why would a bot assume that there isn't a single cover-all answer — that the answer may vary depending on a person's circumstances? Where does the concept of readers' possible worry come from in the first place? But there is a more fundamental issue with this question which is very easy to overlook. The question doesn't state what the worry is about.

There are several very human leaps of inference between reading this article and asking, "How worried should I be?" First of all, the article is about the flu. Specifically, it is about other people getting the flu. There is nothing explicit to suggest that the reader could get the flu.

We could ignore that issue and assume our bot already knows that anyone can get the flu. But why should the flu be worrying? Our bot would need further knowledge of the world outside this news article to know that flu is bad, as there is nothing explicit in the article to suggest this.

It is only at this point, after making the inferences:

other people have flu -> I could get flu -> flu is bad

that it would then be reasonable to be worried. However, the article lacks the information for a bot to make these connections without understanding more about the world around us — let alone answer the question.
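The chain above can be made concrete with a toy forward-chaining sketch. Everything in it is an illustrative assumption. The facts and rules are hand-written strings, and the names `forward_chain`, `article_facts`, `world_facts` and `world_rules` are hypothetical. The point is that the article supplies only the first fact. The bot reaches "worry is reasonable" only when world knowledge is supplied from outside the article.

```python
# Toy forward chaining over hand-written strings (illustrative assumption only).
Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Repeatedly apply 'if all premises hold, add the conclusion' until nothing new appears."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


# The only thing the flu article states explicitly.
article_facts = {"other people have flu"}

# World knowledge the article never states (hypothetical, supplied by hand).
world_facts = {"flu is bad"}
world_rules: list[Rule] = [
    (frozenset({"other people have flu"}), "I could get flu"),
    (frozenset({"I could get flu", "flu is bad"}), "worry is reasonable"),
]

if __name__ == "__main__":
    # From the article alone, nothing new can be inferred.
    print("worry is reasonable" in forward_chain(article_facts, []))  # False
    # With outside facts and rules, the chain completes.
    print("worry is reasonable" in forward_chain(article_facts | world_facts, world_rules))  # True
```

Even with the rules available, the conclusion fails if the fact "flu is bad" is missing. Each link in the chain is a separate piece of knowledge the bot must bring with it.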

Types of question

The example above illustrates some of the issues that arise when a machine tries to understand a question, which is a very large part of the challenge of automated question answering. This can be further complicated by the phrasing and type of question. Some types of question are significantly harder to understand and reason about than others.

Several years ago, a team at Facebook AI Research published a paper, "Towards AI-Complete Question Answering: A Set of Prerequisite Toy Tasks" (the bAbI tasks), suggesting a set of proxy tasks as prerequisites to full question answering. In this paper, the authors propose 20 different types of question, each requiring a different kind of reasoning to answer. Here are a few of the categories, which help to shed light on some of the issues.

Single supporting fact

Who is X?

This is the simplest type of question: a single statement in the text directly answers it.

These questions should be relatively simple to answer. However, even a question such as "Who is X?" can be easier or harder depending on how it is framed. "Who is Theresa May?" should be easier to answer than "Who is the PM (or Prime Minister)?", because many countries have Prime Ministers, and the person filling the role changes over time.
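As a rough illustration, the sketch below contrasts the two framings over a tiny hand-written table. The table is an assumption made for illustration, not a BBC data source. A name maps straight to one supporting fact. A role, by contrast, also needs a country and a date, and "Who is the PM?" supplies neither. The function names `who_is_person` and `who_holds` are hypothetical.

```python
from datetime import date
from typing import Optional

# Hand-written toy data (assumption): one biography and a few role holders.
BIOGRAPHIES = {
    "Theresa May": "a British politician, UK Prime Minister from 2016 to 2019",
}

# (role, country, holder, start date, end date); the end date is exclusive.
ROLE_HOLDERS = [
    ("Prime Minister", "UK", "David Cameron", date(2010, 5, 11), date(2016, 7, 13)),
    ("Prime Minister", "UK", "Theresa May", date(2016, 7, 13), date(2019, 7, 24)),
]


def who_is_person(name: str) -> Optional[str]:
    """Single supporting fact: the name alone identifies the answer."""
    return BIOGRAPHIES.get(name)


def who_holds(role: str, country: str, on: date) -> Optional[str]:
    """A role only resolves to a person once a country and a date are fixed."""
    for r, c, holder, start, end in ROLE_HOLDERS:
        if r == role and c == country and start <= on < end:
            return holder
    return None


if __name__ == "__main__":
    print(who_is_person("Theresa May"))
    # "Who is the PM?" cannot be answered until the country and date are filled in:
    print(who_holds("Prime Minister", "UK", date(2017, 1, 1)))  # Theresa May
    print(who_holds("Prime Minister", "UK", date(2012, 1, 1)))  # David Cameron
```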

Time reasoning

Who was the Prime Minister before Theresa May?

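Answering this involves understanding the question's semantics beyond simply identifying it as a "who" question about the role "Prime Minister". The word "before" means the bot must also order the holders of that role in time and pick the one immediately preceding Theresa May.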
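A minimal sketch of that extra step follows. It assumes a small hand-written table of UK Prime Ministers and their start dates, deliberately listed out of order. Looking up the role is not enough on its own: the bot has to sort the holders chronologically before "before" means anything. `holder_before` is a hypothetical helper name.

```python
from datetime import date
from typing import Optional

# Hand-written toy table (assumption), deliberately not in chronological order.
UK_PRIME_MINISTERS = [
    ("Theresa May", date(2016, 7, 13)),
    ("Gordon Brown", date(2007, 6, 27)),
    ("David Cameron", date(2010, 5, 11)),
]


def holder_before(holders: list[tuple[str, date]], name: str) -> Optional[str]:
    """Return whoever held the role immediately before `name`, ordered by start date."""
    ordered = [holder for holder, _ in sorted(holders, key=lambda h: h[1])]
    if name not in ordered:
        return None  # this person never held the role, according to our table
    i = ordered.index(name)
    return ordered[i - 1] if i > 0 else None  # None: nobody earlier in the table


if __name__ == "__main__":
    print(holder_before(UK_PRIME_MINISTERS, "Theresa May"))  # David Cameron
```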

Multiple supporting facts

Are Apple's stocks cheaper than Microsoft's?

This question requires multiple layers of understanding in order to answer:

- Understanding the question is a comparison of two values

- Finding the price of Apple's stocks

- Finding the price of Microsoft's stocks. This additionally involves interpreting "cheaper than" as a comparative construction in which the key noun "stocks" is dropped from the second half ("Microsoft's" rather than "Microsoft's stocks"). Recovering the dropped noun is required in order to understand what it is about Microsoft (its stocks) that should be looked up.

- Calculating the difference and returning the appropriate answer.
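The steps above can be sketched as a toy pipeline. This is an illustrative assumption, not how a production QA system works. A single regular expression recognises one question shape, and the dropped noun is restored by copying it from the first half. The prices are made-up placeholders rather than real market data. `answer_comparison` and `PRICES` are hypothetical names.

```python
import re
from typing import Optional

# Made-up placeholder prices, NOT real market data.
PRICES = {"Apple": 120.0, "Microsoft": 95.0}

# Step 1: recognise a comparison of two values. Only this one phrasing is handled.
COMPARISON = re.compile(
    r"^Are (?P<a>\w+)'s (?P<noun>\w+) (?P<op>cheaper|more expensive) than (?P<b>\w+)'s\?$"
)


def answer_comparison(question: str, prices: dict[str, float]) -> Optional[str]:
    match = COMPARISON.match(question)
    if match is None:
        return None  # not a question shape this toy parser understands
    a, b, noun, op = match["a"], match["b"], match["noun"], match["op"]
    # "Microsoft's" has lost its noun; assume it is the same noun as in the first half.
    if a not in prices or b not in prices:
        return None
    # Steps 2 and 3: look up both values.
    price_a, price_b = prices[a], prices[b]
    # Step 4: compare, compute the difference, and phrase the answer.
    holds = price_a < price_b if op == "cheaper" else price_a > price_b
    verdict = "Yes" if holds else "No"
    return (
        f"{verdict}: {a}'s {noun} cost {price_a:.2f} and {b}'s {noun} cost "
        f"{price_b:.2f}, a difference of {abs(price_a - price_b):.2f}."
    )


if __name__ == "__main__":
    print(answer_comparison("Are Apple's stocks cheaper than Microsoft's?", PRICES))
```

Any rewording, such as "Is Apple cheaper than Microsoft?", falls outside the pattern and returns `None`. That brittleness is exactly why understanding the question is the hard part.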

So what do audiences actually ask?

In practice, what do audiences of BBC News actually ask? To investigate this, I spoke with the New News team, part of the Internet Research and Future Services (IRFS) group within BBC R&D, which is conducting preliminary research on innovative formats for news. From their findings, people seem to be broadly interested in three types of question:

1. Simple fact-based questions to fill gaps in their knowledge

News articles can require a lot of assumed knowledge that a given reader may not have. The team found that people think news outlets often don't go back to the start of the story to explain things clearly.

2. "Update me" questions

A catch-up to bring a user up to speed on what they have missed in a given time period.

3. Personal impact

How does this topic affect the user? This includes questions like our first example, "How worried should I be?"

This is a useful high-level overview, but in practice we also need concrete examples of what people want to know. To get a deeper insight into the questions readers may ask about specific news articles, I evaluated a set of questions provided by the New News team. These were questions that readers had asked, or that the team thought readers would ask, about three news articles from three different domains: Brexit, the Grenfell Tower inquiry, and Elon Musk.

With this set of questions, I assessed the feasibility of automatic question answering by considering whether a human could answer each question from the article alone, or from all published BBC News articles.

The results? Very few of the provided questions could be answered from the article that prompted them. Moreover, access to all BBC News articles rarely helped to answer them. Many of the questions covered fundamental concepts. News articles provide a rolling update on an issue, but there seems to be a lack of articles that go back to basics to explain the underlying ideas. For example, I couldn't find a clear answer to "What is Chequers?" in any BBC News article. (Chequers is the country home of the Prime Minister, where key Brexit negotiations have taken place.) There are, however, multiple articles that convey the idea that it is some kind of location and/or meeting. Other questions are vague, for a variety of reasons. Consider:

Will there be an election?

This presumably refers to a UK election, but that is an assumption based on the subject matter of the article that prompted the question. Also, taken at face value, the answer to this question is simply "yes", since there will always be another election eventually. The question is really asking whether there will be another snap election.

The main problem here is the ambiguity of the question rather than the search for an answer. In addition, there is no definite answer to this question at the moment — only the opinions of various public figures.

What does PM stand for?

There is ambiguity over which sense of the phrasal verb "stand for" is intended. It could ask what the abbreviation "PM" expands to, or it could carry the sense of "support" or "allow", as in "What does the PM support?"

In the setting of a chatbot conversation, people are less likely to ask fully explicit questions. In any dialogue, spoken or written, a shared context builds up between the participants. Speakers (or writers) often refer back to the salient concepts that all participants have access to in context-sensitive ways. For example, they use pronouns (he/she/it) to refer back to entities mentioned earlier. As a result, people are more likely to ask questions about previously mentioned people or places without naming them explicitly.
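To show why this is hard, here is a deliberately naive sketch of one simple baseline idea: replace each pronoun with the most recently mentioned entity of a compatible type. The entity types, the conversation history and the name `resolve_pronouns` are hand-written assumptions. A real system would need proper coreference resolution. This heuristic can easily pick the wrong person once several people have been mentioned, and it mishandles possessives such as "her plan".

```python
import re

# Naive heuristic (assumption): each pronoun maps to one coarse entity type.
PRONOUN_TYPES = {"he": "PERSON", "she": "PERSON", "him": "PERSON", "her": "PERSON", "it": "THING"}
PRONOUN_PATTERN = re.compile(r"\b(" + "|".join(PRONOUN_TYPES) + r")\b", re.IGNORECASE)


def resolve_pronouns(question: str, history: list[tuple[str, str]]) -> str:
    """Replace pronouns with the latest compatible entity from `history` (oldest first)."""

    def substitute(match: re.Match) -> str:
        wanted = PRONOUN_TYPES[match.group(0).lower()]
        for entity, kind in reversed(history):
            if kind == wanted:
                return entity
        return match.group(0)  # nothing compatible mentioned yet: leave the pronoun as-is

    return PRONOUN_PATTERN.sub(substitute, question)


if __name__ == "__main__":
    history = [("Theresa May", "PERSON"), ("the Brexit plan", "THING")]
    print(resolve_pronouns("What did she say about it?", history))
    # What did Theresa May say about the Brexit plan?
```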

Conclusion

Question answering is extremely challenging. Here I have only talked about some of the difficulties in understanding the meaning of a question; finding content that suitably answers this interpreted meaning is another issue entirely. The key takeaway for me is that people ask questions because the answer is not there, or at least not easy to find. This makes the task of automating a question answering service very difficult.

However, it is useful to understand why question answering is hard in order to understand what is possible. I have also highlighted the fact that people ask difficult questions. They are difficult because of the way they are posed, because they are open-ended, or even because the answer cannot be found anywhere in the news.

It is important to recognise here too that these gaps in information are not the fault or responsibility of any one journalist. Explaining every concept in every news article from first principles would be a near-impossible task. We're hopeful that by narrowing the scope and application of automated question answering, we can begin testing how helpful the service might be to online news readers. In my next piece, I'll present some of the prototypes I developed with the help of News Labs engineers, which suggest possible future use cases for the technology.
