It all started with a Hack: February 2017

The original idea of getting BBC News via voice devices was born out of a BBC Hack event in early 2017.

Over 48 hours we designed an “Action” to allow Google Home to read out articles from the BBC News front page and topic pages. For the first time, we knew we could enable users to hear the top stories from the BBC News website via Google Home. It was pretty basic, and none of the content was customised for voice.

But it worked.

We proved that it was technically possible for users to ask for news about a certain topic. In early demos we used Brexit and the supermarket Tesco as example topics.

Our hypothesis was that voice would be a more convenient way for some people to get the news that they wanted instead of doing complicated searches on the website.

We liked it, so we continued the build

After the hack we thought we were on to a winner, so we developed the idea further in Labs and did some guerrilla testing along the way.

We demoed this early prototype to the BBC’s Executive Committee, including the Director-General, Tony Hall.

It went well, but it became obvious that text articles read out by a synthetic voice weren’t the most engaging way of building the experience. More work was needed.

Becoming a project

One of the benefits of working in News Labs is that we can work on developing new ideas from end to end.

We quickly realised that we would need to work on three areas:

- An audience experience - building a prototype Alexa Skill or Google Action.

- A prototype production tool, allowing journalists to prepare the material.

- A way of connecting those two prototypes.

Iteration 1: May 2017

In the early days, we took inspiration from radio, rather than dreaming up original voice-first content.

In a workshop, we came to the consensus that the easiest and most familiar way of interacting with audio content would be a playlist, offering chapterised or segmented broadcast content.

This is the point where we made it a proper project with clear objectives. To me, this was very exciting, encouraging and motivating, not only because Voice was still a relatively new area to explore at the time, but also because it was one of the few times I’d seen a hack prototype become a fully fledged stand-alone project.

We switched from the platform of our original prototype to Amazon Alexa, mainly because at the time the Google Actions software development kit didn’t support playing long-form audio.

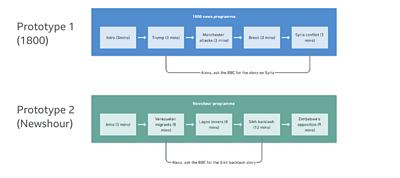

We prototyped two Alexa Skills to discover how users interacted with voice and whether there was much appetite for the service.

- A Newshour Skill (with longer pieces drawn from Newshour on the BBC World Service)

- A “1800” prototype (shorter news updates drawn from Radio 4’s Six O’Clock News programme).

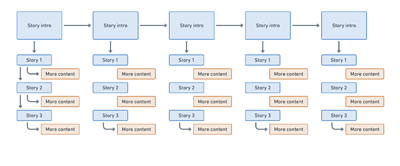

Below is an illustration of the flow of the two prototypes:

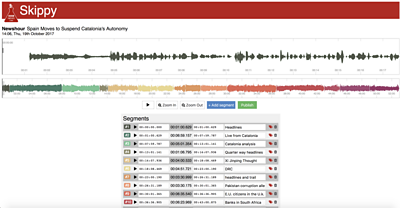

Aside from the Alexa Skill, we made a bespoke web editor to support the production of the content. Its main functions were semi-automated story segmentation based on the running order and the ability to tag content. Here’s a screenshot of the web editor, which was made by my wonderful colleagues Jonty Usborne, Tamsin Green and James Dooley:

We learnt that there was a clear appetite for skippable, searchable audio news. Audiences sought a pacier, up-to-date bulletin and found that shorter content made the 1800 prototype easier to navigate. Finally, we learnt that real, human voices appealed and that a range of accents could give the prototype a more authentic feel.
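To make the idea of segmenting a programme by its running order a little more concrete, here is a minimal Python sketch. It is not the code from our web editor. The `RunningOrderItem` and `Segment` types, the field names and the assumption that each running-order entry carries a start offset in seconds are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RunningOrderItem:
    """One entry in a programme's running order (hypothetical shape)."""
    title: str
    start_seconds: float  # assumed: offset of the story within the audio


@dataclass
class Segment:
    """A skippable chunk of audio, with room for editorial tags."""
    title: str
    start_seconds: float
    end_seconds: float
    tags: list[str] = field(default_factory=list)


def segment_by_running_order(items, programme_length_seconds):
    """Turn running-order entries into contiguous audio segments.

    Each segment runs from its own start to the start of the next item;
    the last segment runs to the end of the programme.
    """
    ordered = sorted(items, key=lambda item: item.start_seconds)
    segments = []
    for index, item in enumerate(ordered):
        if index + 1 < len(ordered):
            end = ordered[index + 1].start_seconds
        else:
            end = programme_length_seconds
        segments.append(Segment(item.title, item.start_seconds, end))
    return segments


if __name__ == "__main__":
    running_order = [
        RunningOrderItem("Headlines", 0),
        RunningOrderItem("Politics", 95),
        RunningOrderItem("Sport", 210),
    ]
    for segment in segment_by_running_order(running_order, 1800):
        print(segment.title, segment.start_seconds, segment.end_seconds)
```

In a sketch like this the automated part only proposes the boundaries. A journalist would still check them and add tags by hand, which is why we describe the segmentation as semi-automated.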

Technical limitations:

We found that Amazon’s Automatic Speech Recognition (ASR) wasn’t mature enough to recognise all the spoken topics. For example, “Harvey Weinstein” was misunderstood by Alexa as “hobby wine stain”, “have you wine stain”, “hyvee Wayne stein” and “ask about Harvey wasting”.

Iteration 2: Feb 2018

While iteration one focused on the proposition, user needs and expectations, this iteration focused on interaction, usability and functionality.

Based on our learnings from the first prototype and due to the limitations of technology on the platform, especially ASR and Natural Language Understanding (NLU), we decided to remove the ability to search for topics.

We instead trialled bespoke content for the Voice platform, working closely with a team in the BBC that was experimenting with content for voice.

We made another prototype with bespoke content created by the Radio 5 Live team, and tested two variants of it that differed in the introduction messaging and the number of sections.

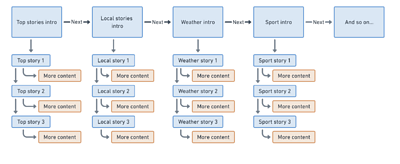

This time, apart from offering basic playback control, we also introduced a new “asking more” feature so that users had the opportunity to dig deeper into a story.

This was a feature that made it all the way into production and one that audiences use all the time.

Below is an illustration of the flow:

We learnt that users naturally used commands such as play, pause, resume, skip, next and (to an extent) back. After some time spent with the skill, most had gathered a sense of the different news sections (though not all were consciously aware of the ability to skip whole sections). The content style and tone also appealed to the bulk of listeners.
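As a rough illustration of the playback commands mentioned above, the following Python sketch models a bulletin as an ordered list of stories grouped into sections. It is not the skill's actual code and does not use the Alexa SDK. The `BulletinPlayer` class, the `Story` type and the "skip section" phrase are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Story:
    section: str  # e.g. "Main news", "Sport", "Weather"
    title: str


class BulletinPlayer:
    """Tracks the listener's position in a sectioned bulletin."""

    def __init__(self, stories):
        self.stories = list(stories)
        if not self.stories:
            raise ValueError("a bulletin needs at least one story")
        self.position = 0
        self.playing = False

    def handle(self, command):
        """Apply a spoken command and return the story now selected."""
        command = command.strip().lower()
        if command in ("play", "resume"):
            self.playing = True
        elif command == "pause":
            self.playing = False
        elif command in ("skip", "next"):
            # Stay on the last story rather than running off the end.
            self.position = min(self.position + 1, len(self.stories) - 1)
        elif command == "back":
            self.position = max(self.position - 1, 0)
        elif command == "skip section":  # hypothetical phrasing
            self._skip_section()
        else:
            raise ValueError(f"unsupported command: {command!r}")
        return self.stories[self.position]

    def _skip_section(self):
        """Jump to the first story of the next section, if there is one."""
        current = self.stories[self.position].section
        for index in range(self.position + 1, len(self.stories)):
            if self.stories[index].section != current:
                self.position = index
                return


if __name__ == "__main__":
    player = BulletinPlayer([
        Story("Main news", "Story A"),
        Story("Main news", "Story B"),
        Story("Sport", "Story C"),
        Story("Weather", "Story D"),
    ])
    for spoken in ("play", "next", "skip section", "back", "pause"):
        print(spoken, "->", player.handle(spoken).title)
```

Treating "skip" and "next" as synonyms, and "play" and "resume" likewise, reflects the way listeners used these words interchangeably. Skipping a whole section is kept as a separate command because not everyone realised it was possible.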

Not all was good, however. Some of the specifics of the platform were unintuitive to listeners. Some never managed to access ‘more’ during the home study with the command “Alexa, ask Voice News for more”. The biggest pain point was the unintentional triggering of the Flash Briefing, which for some caused extreme frustration.

Iterations 3 & 4: May 2018

At this stage, we wanted to know more about what content people preferred and the tone of voice they would like. We did some lab testing before trialling 5 different content formats with users over 15 days.

1. Traditional

News was arranged into sections: Main news, Local, Sport, Entertainment and Weather. Each section began with headlines then proceeded to cover those stories in detail.

2. 10 things you need to know

This included section messages but no traditional headlines. Content included: three national or international news items, two local stories, two sport, two entertainment and a weather report.

3. 10 stories in 10 minutes

10 short news stories, plus Local, Sport and Weather items.

4. Major event

Coverage focused on the Royal Wedding and the FA Cup final.

5. Themed listicles

Social-media themed, with a relaxed tone. Structurally, content was arranged into: three things the nation and world need to know, three things to make you smile, the “FOMO 3”, sport and weather.

Content was generally well received, but the “banter” between presenters in some of the formats overstepped the mark for some listeners. We also saw that morning users seemed to prefer shorter bulletins and were less likely to use the “more” function. No format was a clear winner.

Time to let go: September 2018

After four iterations in 18 months, we knew voice-controlled news had huge potential, which meant that it was time to find a proper home for the prototype. As we were already engaging with the editorial team in the BBC Voice + AI department, that team was the clear destination.

After a lot of knowledge-sharing sessions, this team took on the prototype and made it ready for 24-hour-a-day production.

On a personal note, the journey didn’t end there. I moved too, to the Voice + AI team.

While busying myself on other projects, I got to watch from afar as something I had worked on for months was developed in a production-ready way.

I learnt some lessons too, this time about how best to transfer an innovative prototype to a team that can support it.

Lessons we’ve learnt for transferring a prototype to a production team:

- Identify and engage with the destination teams as early as possible and as frequently as possible.

- Agree what you’re going to transfer. In our case, we were not going to transfer the whole tech stack. Instead, we focused on transferring our learnings - both what we had learnt about users and the technical limitations.

Then it went live: December 2019

To me, this definitely felt like a long-awaited baby finally being born.

I’m really proud that we took a prototype that was born out of a hack event and then developed it all the way to the stage where a team wanted to ready it for production.

It’s great that it’s finally in front of real audiences and making a positive impact on their everyday lives, which is something I have always wanted to focus on since I joined BBC News.

It feels a little like a dream coming true.

This post is part of a three-part series about BBC Voice + AI. The other updates are here and here.
