Aims

How can we use transcription technology to help journalists cover breaking news stories?

Outline

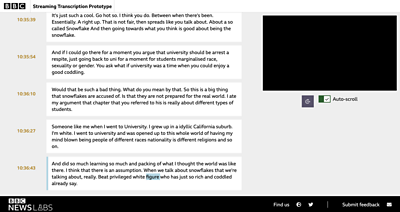

Our Streaming Transcription prototype is a web application that transcribes incoming audio and video feeds in real time.

It's built on a transcription engine that was trained on BBC data and specifically optimised for handling streaming media.

Why?

We already know that there's huge demand in the newsroom for monitoring and navigating audio and video files using automatically generated transcripts. Our work on file-based transcription, where journalists upload a recording and wait for a transcript to be generated, has recently been transferred into production.

We wanted to explore whether we could build a similar tool, but for streaming media feeds.

One of the BBC's top priorities for digital news is being the best at delivering breaking stories. We know that the teams producing our live pages currently need to allocate journalists to manually transcribe quotes from events such as press conferences. We thought: could we make monitoring live event feeds easier?

Luckily, our colleagues in BBC Research and Development have already developed a live transcription engine that's optimised for handling streaming media. However, it hasn't been tested in the newsroom yet.

This project gives us the opportunity to evaluate the speed and accuracy of this streamlined speech-to-text service, and to feed our findings back to our colleagues in R&D.
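
As a rough illustration of what evaluating speed and accuracy could involve, here is a minimal Python sketch. It assumes accuracy is scored as word error rate (WER) against a human-made reference transcript, and speed as the average delay between a phrase being spoken and its text appearing on screen. Neither metric is confirmed as the one used in this project, and the function names `word_error_rate` and `mean_latency_seconds` are hypothetical.

```python
from typing import Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumption: simple lowercase whitespace tokenisation, with no
    punctuation stripping or number normalisation.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def mean_latency_seconds(spoken_at: Sequence[float],
                         displayed_at: Sequence[float]) -> float:
    """Average delay between a phrase being spoken and its text appearing.

    Assumption: both sequences are timestamps in seconds, aligned
    phrase by phrase.
    """
    if not spoken_at or len(spoken_at) != len(displayed_at):
        raise ValueError("timestamp lists must be non-empty and equal in length")
    delays = [shown - spoken for spoken, shown in zip(spoken_at, displayed_at)]
    return sum(delays) / len(delays)
```

A lower WER means fewer word-level mistakes; a value of 0 means the automatic transcript matches the reference exactly. In a newsroom trial, numbers like these would only complement journalists' own judgement of whether the output is usable.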

Next steps

- We will run a trial with journalists in the newsroom to test whether the speed and quality of the transcription are good enough to be useful when covering breaking news.

- If the speed and accuracy are good enough, we will look to improve the design of our prototype and add features before running further user testing.

A special thanks to Matt Haynes, Misa Ogura and Ben Clark from BBC R&D for their work on streaming Kaldi.

Results

- We've completed a prototype tool which we will test with journalists to understand whether the transcription is fast and accurate enough to be used when covering breaking news.
